Tracer: Spatial Sound Composer

VR composition tool for the 4DSOUND system

Fascinated by the intersection of cutting-edge technology and music-making, I have long wondered what VR could bring to the world of sound design and composition. How can it enhance those workflows as a new design tool?

After being invited as artists in residence at 4DSOUND's Spatial Sound Institute in Budapest, Gábor Pribék and I developed a new interface that explores the relation between the tangible process of drawing and spatial composition.

What is 4DSOUND?

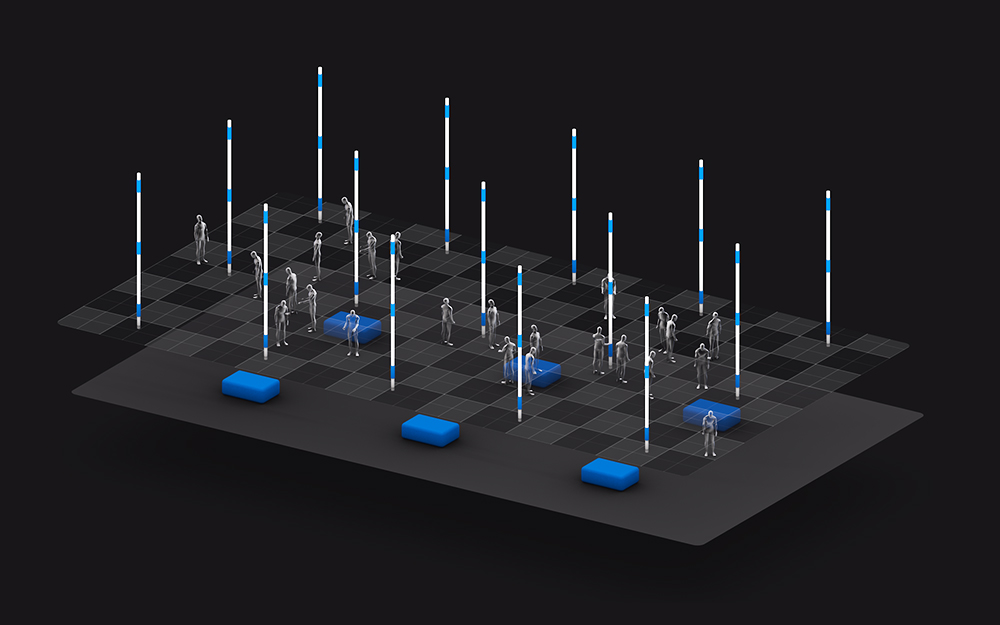

"4DSOUND is a collective exploring spatial sound as a medium. Since 2007, they have developed an integrated hardware and software system that provides a fully omnidirectional sound environment. In 2015, 4DSOUND founded the Spatial Sound Institute in Budapest, a permanent facility dedicated to the research and development of spatial sound."

In practice, 4DSOUND's system is a cubic grid array of omnidirectional speakers. Together, the speakers create a uniform, virtual sonic environment in which artists create site-specific sound pieces, performances and experiences. Using the dedicated software and hardware, it is possible to animate the sound so that it is perceived as physically travelling through the whole area. Anyone present at the venue is free to walk around and listen, having a personal and unique sonic experience relative to their position in the physical space.

Context

After my first-hand experience of the 4DSOUND system in Berlin in 2013, I was amazed by how immersive and intimate the sonic space feels, much like virtual reality. That is why we chose to approach our residency project slightly differently than most residents: we didn't aim to create a sonic experience, but rather a tool with which we could create them.

As designers, we were particularly interested in what Mixed Reality could bring to the process of creation, and how introducing a visual layer would influence the whole process of designing a sonic environment.

Note: we used VR because we already had some experience with it and a headset on hand to prototype with. Getting rid of the cables and allowing the user to walk freely in space would be a plus, but at the time of development no device offered the UX we wanted.

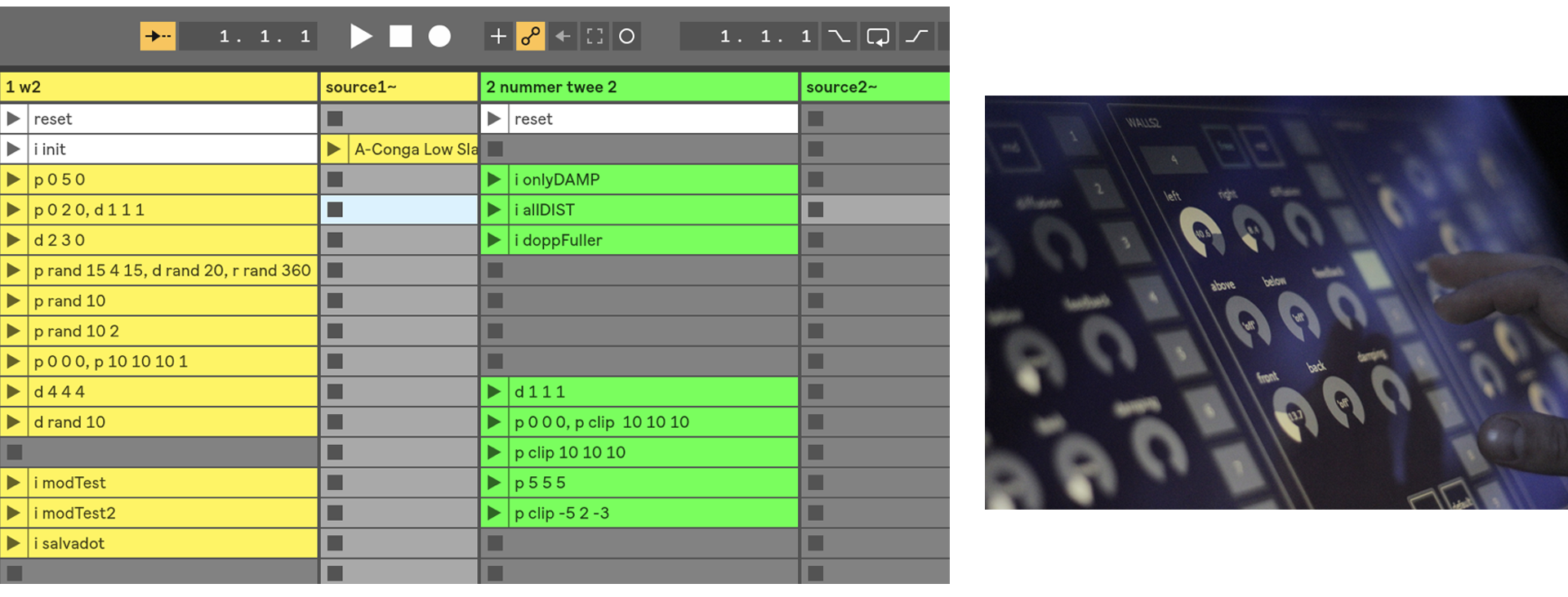

Current workflow: designing for 4DSOUND

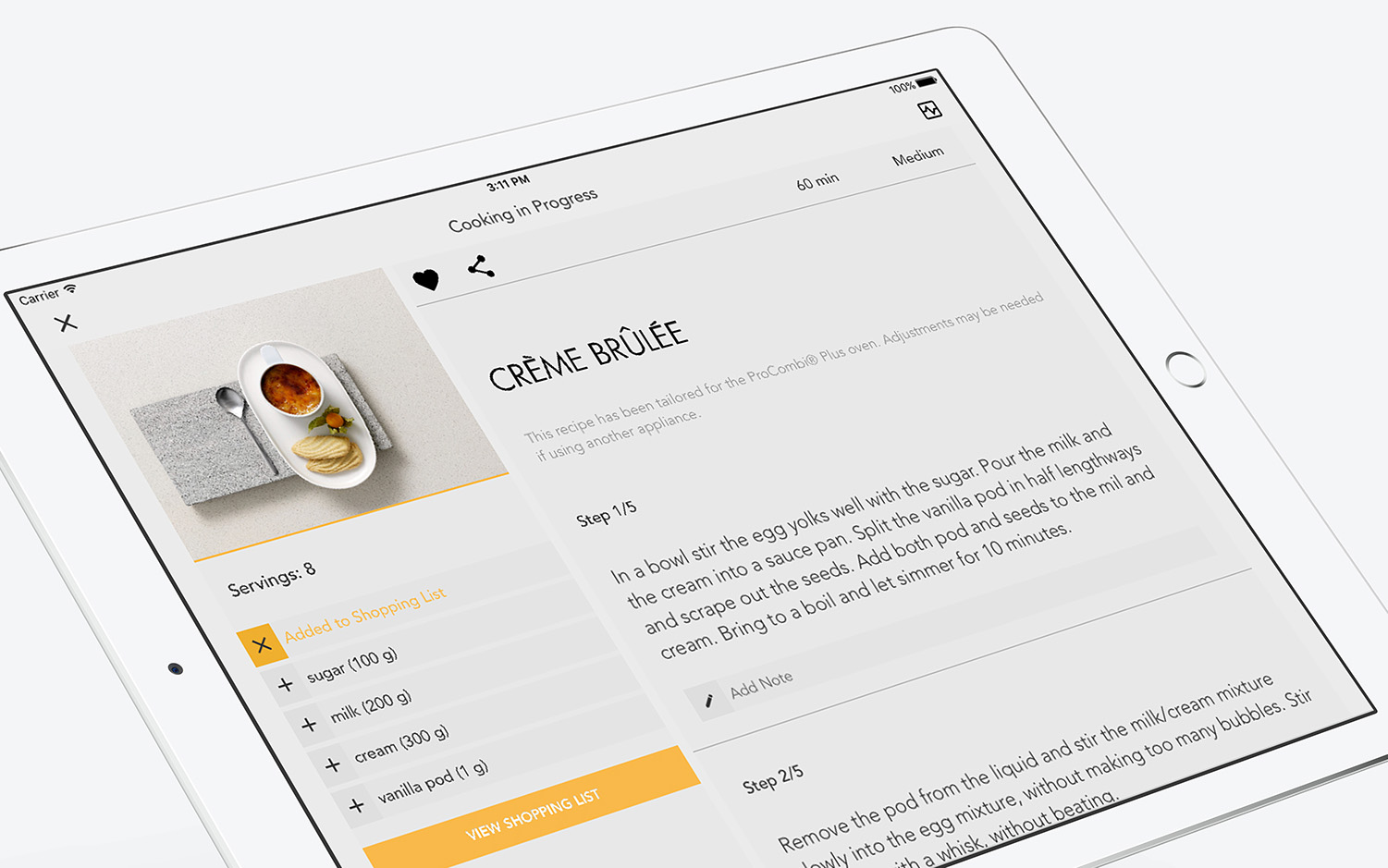

The most common interaction workflow is based on manually entering XYZ coordinates (or simple, linear math functions) as the names of MIDI clips in Ableton Live, the most popular tool among artists working with 4DS. Additionally, an iPad is available for real-time control of the engine's parameters (via the M4L plugin and a custom Lemur UI).

Artists can also develop their own software, control surfaces or UIs to control the 4DS Engine via OSC. That's how Tracer works too, but more on that later.

We observed that the current workflow lacks any connection between the artist and the space. To change that, we chose a concept that almost everyone is familiar with: drawing. It gives the artist tangible, real-time control over the sonic environment they are present in.

A drawing operates as a visual cue, a missing link between the performer and the sound travelling in space. It serves as an additional anchor for spatial awareness, provides visual feedback and amplifies the human perception of sound.

How does it all work?

Tracer consists of two parts: an Ableton Live set for the sound, and a VR interface developed in Unity that translates it into 3D space.

Tracer therefore presents an extended, immersive interface for composers planning their composition on the 4DS System. It's a prototyping tool that lets the artist design a spatial composition using freely drawn paths.

Our design process

During the six months of the residency, we iterated on multiple concepts and interaction principles. Below you can see the first alpha prototype, built to prove the concept of drawing and its link to perception. This prototype ran without the 4DSOUND system, using only Unity's built-in audio engine and its physical sound emulation, which is far from the actual system.

In the first version, you could only draw, assign sounds to Tracers, and control speed and playback modes.

Interfaces

Here we used purely 2D interfaces, somewhat resembling a strange tablet fixed to your secondary hand. The main hand controlled that 'tablet' with a laser pointer, which was not very convenient. The first round of user testing showed that using a 2D interface for this felt quite frustrating, so we redesigned it completely: we made it physical and spatial, and made it possible to pin different parts of the UI to the physical space. We got rid of the multi-screen navigation for assigning sounds and reworked the faders to resemble the physical faders you may know from mixers or other hardware control surfaces.

Navigation in space and interactions

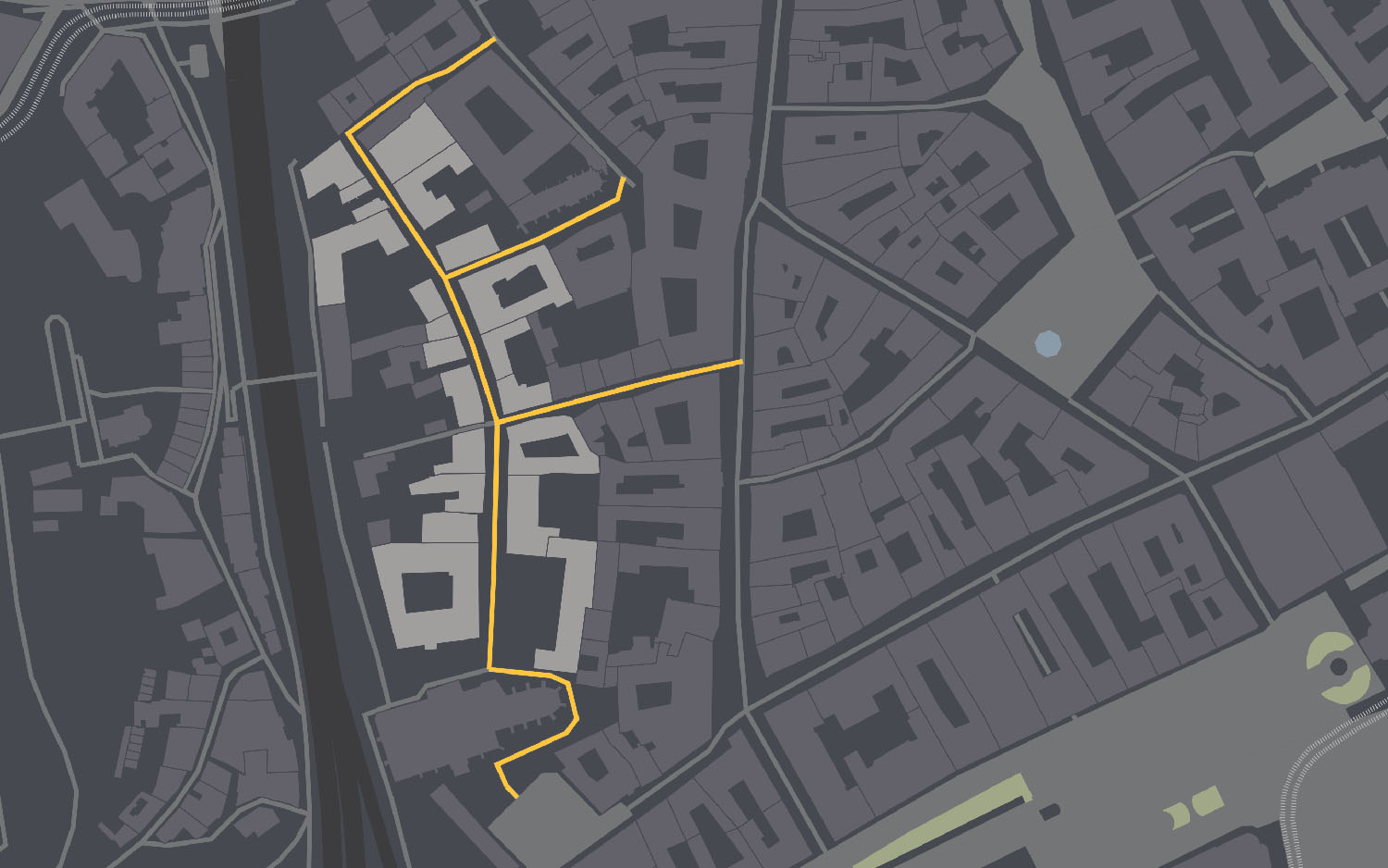

After putting on the VR headset, the performer can draw paths in the virtual 3D space and then assign Tracers – sound-emitting objects – to these hand-drawn paths. A Tracer then emits the assigned sound into the physical space. The performer has control over several sonic and spatial parameters directly from within VR, and can manipulate them in real time, making it possible to create live performances and experiences.

We've also added more controls over some sonic and spatial parameters. It's possible to control the volume of the track, its play state, the speed at which the Tracer follows the path, the play mode of the Tracer along the path, and the physical dimensions of the sound in space, as well as to send the track through an effect chain.

During the design process, we looked at how other apps solve the integration of interfaces and spatial navigation. Those of Tilt Brush and Gravity Sketch feel very natural, intuitive and straightforward. We decided not to reinvent the wheel and to use similar navigation patterns in Tracer, making it more intuitive for users already acquainted with VR.
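The speed and play mode controls can be illustrated with a small sketch of a point moving along a hand-drawn path, treated here as a polyline of XYZ points. This is an assumption about how such a follower could work, not Tracer's actual implementation, and the play mode names `loop`, `ping_pong` and `once` are hypothetical.

```python
import math

Point = tuple[float, float, float]


def position_on_path(path: list[Point], distance: float) -> Point:
    """Return the point `distance` units along the polyline, clamped to its ends."""
    remaining = max(distance, 0.0)
    for start, end in zip(path, path[1:]):
        segment = math.dist(start, end)
        if segment > 0 and remaining <= segment:
            t = remaining / segment
            # Linear interpolation between the two ends of this segment.
            return tuple(a + (b - a) * t for a, b in zip(start, end))
        remaining -= segment
    return path[-1]


def tracer_position(path: list[Point], speed: float, elapsed: float,
                    mode: str = "loop") -> Point:
    """Position of a Tracer after `elapsed` seconds at `speed` units per second.

    The mode names are hypothetical labels for illustration only.
    """
    total = sum(math.dist(a, b) for a, b in zip(path, path[1:]))
    if total == 0:
        return path[0]
    travelled = speed * elapsed
    if mode == "loop":          # jump back to the start after reaching the end
        travelled %= total
    elif mode == "ping_pong":   # travel back and forth along the path
        travelled %= 2 * total
        if travelled > total:
            travelled = 2 * total - travelled
    elif mode == "once":        # stop at the end of the path
        travelled = min(travelled, total)
    else:
        raise ValueError(f"unknown play mode: {mode}")
    return position_on_path(path, travelled)


path = [(0.0, 0.0, 0.0), (2.0, 0.0, 0.0), (2.0, 2.0, 0.0)]
print(tracer_position(path, speed=1.0, elapsed=5.0, mode="ping_pong"))
```

Evaluating `tracer_position` once per frame gives a stream of coordinates for the sound source, with the speed fader simply scaling how quickly the distance along the path grows.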

Presentation

We presented Tracer in September 2018 as part of a festival held at the Spatial Sound Institute. A full day was dedicated just to Tracer, and the public was invited to come and try it first-hand. We collected feedback on the usability of the tool by interviewing participants right after their experience.

In June 2019, Tracer was featured in the documentary film on 4DSOUND, which presents projects of selected artists and their take on working with the unique sonic environment.

Credits

Code: Gábor Pribék

Interaction & UX: Filip Ruisl, Gábor Pribék

Sound design, music & visual design: Filip Ruisl

Special thanks to Paul Oomen, Ana Amóros Lopéz

4dsound.net